Software performance guru Martin Thompson (@mjpt777) gave an illuminating talk on event-sourced architectures, and why event-driven, state-machine designs are the way forward for complex, multi-path software systems (“Event Sourced Architectures and what we have forgotten about High-Availability”, [slides: 700KB PDF]).

State Machines and Message Queues

Martin began by reviewing how state machines work: each transition moves the system from one known good state to another known good state.
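To make the idea concrete, here is a minimal sketch of a table-driven state machine. The order-processing states and event names are my own illustration, not something from Martin's talk; the point is only that every allowed transition lands in a known good state, and anything else is rejected.

```python
from enum import Enum


class OrderState(Enum):
    # Hypothetical states, chosen only for illustration.
    NEW = "new"
    PAID = "paid"
    SHIPPED = "shipped"
    CANCELLED = "cancelled"


# Allowed transitions: (current state, event) -> next state.
TRANSITIONS = {
    (OrderState.NEW, "pay"): OrderState.PAID,
    (OrderState.NEW, "cancel"): OrderState.CANCELLED,
    (OrderState.PAID, "ship"): OrderState.SHIPPED,
    (OrderState.PAID, "cancel"): OrderState.CANCELLED,
}


def step(state: OrderState, event: str) -> OrderState:
    """Return the next known good state, or raise if the event is not allowed."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(
            f"event {event!r} is not valid in state {state.name}"
        ) from None


def run(events, start=OrderState.NEW):
    """Feed a sequence of events through the machine and return the final state."""
    state = start
    for event in events:
        state = step(state, event)
    return state
```

Calling `run(["pay", "ship"])` ends in `OrderState.SHIPPED`, whereas `run(["ship"])` raises, because a new order cannot be shipped before it is paid.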

<rant>This should be basic undergraduate stuff, but although institutions such as Imperial College and University of Reading include such topics, many more university ‘computer science’ courses appear not to, focussing instead on games programming and IT for business; valuable, but surely not at the expense of core knowledge such as state machines.</rant>

In the context of state machines, it was interesting to hear about the IBM IMS TM, the transaction manager which formed part of an inventory management system written by IBM to manage the bill of materials for the Apollo space program. This system used a transactional queue and event-sourcing, and achieved over 2300 transactions per second… on 1960s hardware. The same architectural model is still in use by IMS TM today, over 40 years later.

[Image of Tandem NonStop from http://www.istanbulcar.com/]

Likewise, the Tandem NonStop series of fault-tolerant computers – built from the 1970s onwards by a company eventually acquired by HP – used (in addition to multiple redundant CPUs and other components) an ‘event sourced’ messaging system:

NonStop processors cooperate by exchanging messages across a reliable fabric, and software takes periodic snapshots for possible rollback of program memory state.

The underlying ‘shared-nothing’ architecture and use of event sourcing allowed the NonStop machines to keep the same basic design for over 30 years, which shows how durable and scalable event-sourced architectures can be.

What is Event Sourcing?

A renewed interest since 2000 in these proven but often overlooked techniques led Martin Fowler to describe event sourcing in 2005 as: “Capture all changes to an application state as a sequence of events.” Martin Thompson’s take on event sourcing is: “Apply a sequence of change events to a model in order.” Both definitions rest on the concept of an ordered stream of events, which can also be replayed to other nodes. If this stream of events can be processed by more than one target node, Martin noted in the session, the result can be the foundation of a highly available (HA) system.
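A small sketch may help show why an ordered event stream leads so naturally to HA. The `Event` and `Ledger` names and the account data below are assumptions of mine for illustration only; the idea is that two nodes which apply the same events in the same order arrive at the same state.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    seq: int      # position in the ordered stream, starting at 1
    account: str
    amount: int   # positive = deposit, negative = withdrawal


@dataclass
class Ledger:
    """Hypothetical model whose state is derived purely from events."""
    balances: dict = field(default_factory=dict)
    last_seq: int = 0

    def apply(self, event: Event) -> None:
        # Events must be applied in order, with no gaps or repeats.
        if event.seq != self.last_seq + 1:
            raise ValueError(f"expected seq {self.last_seq + 1}, got {event.seq}")
        current = self.balances.get(event.account, 0)
        self.balances[event.account] = current + event.amount
        self.last_seq = event.seq


def replay(events) -> Ledger:
    """Build a fresh model by applying every event in stream order."""
    ledger = Ledger()
    for event in events:
        ledger.apply(event)
    return ledger


stream = [Event(1, "alice", 100), Event(2, "bob", 50), Event(3, "alice", -30)]
primary = replay(stream)
replica = replay(stream)  # a second node fed the same stream
```

Here `primary` and `replica` compare equal, and a node that receives the events out of order refuses to apply them rather than silently diverging.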

Modern web engines such as Node.js and Nginx use a closely related event-driven processing model. Event sourcing is effectively already present in most database systems today, certainly any that use log shipping as part of their HA setup (Oracle RAC, MySQL Cluster, Microsoft SQL Server in Mirror mode or AlwaysOn mode): a database log streamed to active or passive cluster nodes acts as an event source, as the discrete database events must be applied in order, one after the other. Thus when Martin claims that (I paraphrase here) “the key thing in Enterprise database systems is replication”, he means any kind of data duplication via a serial/ordered stream of data.

Replication models for resilience

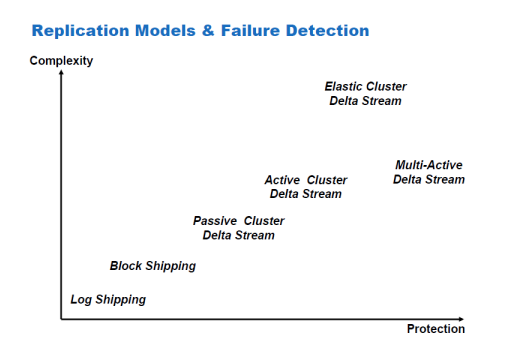

Different kinds of data replication allow systems to detect and recover from different kinds of errors. Broadly speaking (and not surprisingly) the more complex the replication, the better the protection from errors, but at higher processing cost:

[Image from Martin’s slide deck]

In order of increasing complexity and resilience, the schemes outlined by Martin were:

- Log shipping

  - Reliable, but must wait for OS-level operations to complete, such as stream flush(); this can cause delays or data loss if the flush() is infrequent.

- Block shipping

  - This operates at a lower layer than log shipping, and can be OS-agnostic. However, it is still prone to single-node failures.

- Passive cluster with a delta stream

  - This allows a secondary node to take over if the primary node fails.

- Active cluster with a delta stream

  - Deals with “Split-brain” issues (e.g. network failure)

  - Nodes use a distributed lock – the first one of the pair to get the lock wins, the other waits on the lock.

- Elastic cluster with delta stream

  - This scheme can bring in additional machines to add processing capability (e.g. after a failure or to increase throughput)

- Multi-active with delta stream

  - This is the most resilient model, but requires control over the hardware and use of IP multicast

As the level of the resilience required increases, so clearly does the complexity of the replication required to provide that resilience.
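To illustrate one of these schemes, here is a rough in-process sketch of a passive cluster with a delta stream. The `Node` and `PassiveCluster` classes are hypothetical names of my own; a real system would ship deltas over a network and would need failure detection and split-brain protection, all of which are left out here.

```python
class Node:
    """A cluster member that applies numbered deltas in strict order."""

    def __init__(self, name):
        self.name = name
        self.state = {}
        self.last_seq = 0

    def apply_delta(self, seq, key, value):
        if seq != self.last_seq + 1:
            raise ValueError(f"{self.name}: expected {self.last_seq + 1}, got {seq}")
        self.state[key] = value
        self.last_seq = seq


class PassiveCluster:
    """Primary handles writes; the secondary only follows the delta stream."""

    def __init__(self):
        self.primary = Node("primary")
        self.secondary = Node("secondary")

    def write(self, key, value):
        seq = self.primary.last_seq + 1
        self.primary.apply_delta(seq, key, value)
        # Ship the same delta to the standby (a direct call stands in
        # for the network in this sketch).
        if self.secondary is not None:
            self.secondary.apply_delta(seq, key, value)
        return seq

    def fail_over(self):
        """Promote the secondary once the primary is declared dead."""
        if self.secondary is None:
            raise RuntimeError("no standby left to promote")
        self.primary, self.secondary = self.secondary, None
```

After a few writes, calling `fail_over()` leaves the former secondary holding the full state and continuing the sequence numbers where the failed primary left off.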

Key Design/Test Principles

We reviewed some useful design and testing principles to help achieve reliable systems:

- Use pre- and post-conditions:

  - Basic sound engineering practice which allows fast failure and self-consistency within processing operations.

  - Asserting pre- and post-conditions provides a declarative way of identifying expected state.

- Log Replay: capturing logs from production infrastructure and using these to generate traffic/load/request patterns/behaviour against test infrastructure

  - The production logs are ‘replayed’ in whole or in part and the effects analysed.

  - As Jimmy Bogard puts it: “if you’re trying to understand why the current state is what it is, the final state is not nearly as useful as the series of events that led to that endpoint”

- “In-memory asynchronous designs give great performance”

  - Avoid writing data to spinning metal disks if you can

- “Suggest you use multicast for HA” so that you do not need virtual IP addresses or cluster logic in the client

  - IP multicast is probably beyond the reach of most web-based systems at present, but widely used in high-speed, low-latency financial systems

- “Ugly” does not appear if you can keep things in lockstep

  - VMware ESX 4 has a feature called Lockstep, in which events from the primary VM are replayed to a secondary VM. This works only with a single vCPU; synchronizing more than one CPU would be significantly more complex.
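The first two principles combine nicely, so here is a small sketch of both together. Everything in it is assumed for illustration: the `require` helper, the `ContractError` exception, the account operations and the three-line log format are mine, not taken from the talk. The operations check their pre- and post-conditions, and a captured log is replayed against them so that each intermediate state can be inspected.

```python
class ContractError(Exception):
    """Raised when a pre- or post-condition does not hold."""


def require(condition, message):
    if not condition:
        raise ContractError(message)


def deposit(balance, amount):
    require(amount > 0, "pre: amount must be positive")
    new_balance = balance + amount
    require(new_balance - amount == balance, "post: balance grew by amount")
    return new_balance


def withdraw(balance, amount):
    require(amount > 0, "pre: amount must be positive")
    require(balance >= amount, "pre: insufficient funds")
    new_balance = balance - amount
    require(new_balance >= 0, "post: balance must not go negative")
    return new_balance


HANDLERS = {"deposit": deposit, "withdraw": withdraw}

# Stand-in for a log captured from production (hypothetical format).
CAPTURED_LOG = """\
deposit 100
withdraw 30
withdraw 50
"""


def replay_log(log_text, balance=0):
    """Replay each logged operation and record the state after every step."""
    history = []
    for line in log_text.splitlines():
        if not line.strip():
            continue
        operation, raw_amount = line.split()
        balance = HANDLERS[operation](balance, int(raw_amount))
        history.append((line, balance))
    return history
```

Replaying `CAPTURED_LOG` yields the balances 100, 70 and 20 in turn, and a log that tries to withdraw from an empty account fails fast at the offending line rather than corrupting later state.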

Martin’s expectation was that the point-to-point nature of many of today’s web protocols (e.g. HTTP) will begin to be seen as a major limitation, at least within a logical data centre, and IP multicast will start to look increasingly attractive as a way to avoid the need for clusters, load-balancers and similar failure-prone components.

Scaling Event-Sourced Architectures

To round off, we looked briefly at three techniques which can help to make event-sourced architectures scale:

[Image from http://www.udidahan.com/2009/12/09/clarified-cqrs/]

- Command Query Responsibility Segregation (CQRS): establish a clear separation between ‘read’ and ‘write’ responsibilities.

  - A few years ago I helped design and build a large content aggregation and syndication system for a global travel company. Although we didn’t call it CQRS at the time, we did use a similar pattern, and it greatly reduced the complexity in the system.

  - CQRS and Event Sourcing go hand-in-hand for large, data-rich, transactional systems.

- Shards: split up data into separate locations/nodes by a sensible boundary (week, initial letter, geographic area, etc.)

- Break down complex transactions into simpler state machines; avoid distributed transaction coordinators (DTCs) like the plague!
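As a rough sketch of how the first two techniques fit together with event sourcing, the code below separates a write side, which only validates commands and appends events, from a read side, which builds a query-friendly view from those events. The class names, the stock-keeping example and the initial-letter sharding rule are all assumptions made for illustration.

```python
class WriteModel:
    """Command side: validates commands and appends events, nothing else."""

    def __init__(self):
        self.events = []  # append-only event log

    def handle_add_item(self, sku, qty):
        if qty <= 0:
            raise ValueError("qty must be positive")
        event = {"type": "ItemAdded", "sku": sku, "qty": qty}
        self.events.append(event)
        return event


class StockView:
    """Query side: a projection rebuilt purely from the event log."""

    def __init__(self):
        self.totals = {}
        self.position = 0  # number of events already consumed

    def catch_up(self, events):
        # Only apply events this view has not yet seen.
        for event in events[self.position:]:
            sku = event["sku"]
            self.totals[sku] = self.totals.get(sku, 0) + event["qty"]
        self.position = len(events)

    def quantity(self, sku):
        return self.totals.get(sku, 0)


def shard_for(sku, shard_count=4):
    """Pick a shard from the initial letter of a non-empty key (one possible boundary)."""
    return (ord(sku[0].lower()) - ord("a")) % shard_count
```

Because the view remembers its position in the log, calling `catch_up` repeatedly is safe, and several differently shaped read views can follow the same event log without touching the write side.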

Summary

For me, Martin’s session triggered some fascinating investigations deeper into aspects of event sourcing, replication and CQRS in particular. If you get the chance to hear Martin speak, I thoroughly recommend that you go.

The video of Martin’s QCon talk is now online here:

http://www.infoq.com/presentations/Event-Sourced-Architectures-for-High-Availability